Emotional Speech Recognition

Emotion is an important factor in communication. For example, a plain text dictation that reveals no emotion does not adequately convey the semantics of the text.

An emotional speech synthesizer could solve such a communication problem. Speech emotion recognition systems can be used by disabled people for communication, by actors for emotional speech consistency, for interactive TV, for constructing virtual teachers, in the study of human brain malfunctions, and in the advanced design of speech coders. Until recently, many voice synthesizers could not faithfully reproduce human emotional speech, which resulted in unnatural and unattractive speech. Nowadays, the major speech processing labs worldwide are trying to develop efficient algorithms for emotional speech synthesis as well as emotional speech recognition.

Dataset Description

Dataset summary: the TURkish Emotional Speech database (TURES) was constructed; it includes 5100 utterances extracted from 55 Turkish movies.

To achieve such ambitious goals, the collection of emotional speech databases is a prerequisite. Our purpose is to design a useful tool that can be used in psychology to automatically classify utterances into five emotional states: anger, happiness, neutral, sadness, and surprise. The major contribution of our investigation is to rate the discriminating capability of a set of features for emotional speech recognition.

Example Of Emotional Speech

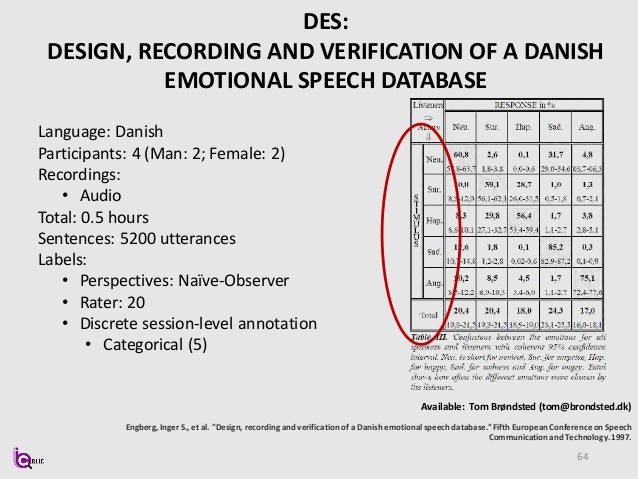

A total of 87 features were calculated over 500 utterances from the Danish Emotional Speech database. The Sequential Forward Selection (SFS) method was used to discover a set of 5 to 10 features that classify the utterances best. The criterion used in SFS is the cross-validated correct classification score of one of the following classifiers: the nearest mean classifier, or the Bayes classifier with class pdfs either approximated via Parzen windows or modelled as Gaussians.
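The selection loop described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it uses the nearest mean classifier as the SFS criterion, interleaved folds for cross-validation, and a tiny invented three-feature dataset in place of the 87 features and 500 utterances. All function names (`fit_centroids`, `predict`, `cv_score`, `sequential_forward_selection`) and the fold count are assumptions made for the example.

```python
def fit_centroids(X, y):
    """Compute the mean feature vector of each class (nearest mean classifier)."""
    by_class = {}
    for row, label in zip(X, y):
        by_class.setdefault(label, []).append(row)
    return {label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in by_class.items()}


def predict(centroids, x):
    """Return the class whose centroid is closest in squared Euclidean distance."""
    return min(centroids,
               key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))


def cv_score(X, y, features, folds=5):
    """Cross-validated correct classification rate using only `features`."""
    correct = 0
    for k in range(folds):
        train_X, train_y, test = [], [], []
        for i, (row, label) in enumerate(zip(X, y)):
            sub = [row[f] for f in features]
            if i % folds == k:          # interleaved fold assignment (assumption)
                test.append((sub, label))
            else:
                train_X.append(sub)
                train_y.append(label)
        centroids = fit_centroids(train_X, train_y)
        correct += sum(predict(centroids, x) == label for x, label in test)
    return correct / len(X)


def sequential_forward_selection(X, y, n_select, folds=5):
    """Greedily add the feature that most improves the cross-validated score."""
    selected, history = [], []
    remaining = list(range(len(X[0])))
    while remaining and len(selected) < n_select:
        scored = [(cv_score(X, y, selected + [f], folds), f) for f in remaining]
        best_score, best_feature = max(scored, key=lambda t: t[0])
        selected.append(best_feature)
        remaining.remove(best_feature)
        history.append(best_score)
    return selected, history


# Invented toy data: feature 1 separates the two classes, features 0 and 2 do not.
X, y = [], []
for i in range(40):
    label = "anger" if i % 2 == 0 else "sadness"
    informative = (0.0 if label == "anger" else 10.0) + 0.1 * (i % 3)
    X.append([1.0, informative, float((i // 2) % 3)])
    y.append(label)

selected, history = sequential_forward_selection(X, y, n_select=2)
print(selected, history)
```

On this toy data the first feature chosen is the informative one (index 1). In a setting like the one described, `n_select` would be between 5 and 10, and the nearest mean classifier could be swapped for a Bayes classifier without changing the loop.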

Emotional Speech Topics

After selecting the 5 best features, we reduce the dimensionality to two by applying principal component analysis. The result is a correct classification rate of 51.6% ± 3%, with a 95% confidence interval, for the five aforementioned emotions, whereas random classification would give a correct classification rate of 20%. Furthermore, we identify the two-class emotion recognition problems whose error rates contribute heavily to the average error, and we suggest that the error rates reported in this paper could be reduced by employing two-class classifiers and combining them.
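The reduction from five selected features to two dimensions can be illustrated with a small principal component analysis sketch. It is only an illustration under stated assumptions: the components are found by power iteration with deflation to keep the example dependency-free (a numerical library would normally be used), the eight-row data matrix is invented, and the function names (`center`, `covariance`, `top_eigenpair`, `pca_2d`) are hypothetical.

```python
import math


def center(X):
    """Subtract the per-feature mean from every row."""
    means = [sum(col) / len(X) for col in zip(*X)]
    return [[v - m for v, m in zip(row, means)] for row in X]


def covariance(Xc):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(Xc), len(Xc[0])
    return [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)]
            for i in range(d)]


def mat_vec(C, v):
    """Multiply matrix C by vector v."""
    return [sum(c * x for c, x in zip(row, v)) for row in C]


def top_eigenpair(C, iters=200):
    """Dominant eigenvalue and unit eigenvector via power iteration."""
    d = len(C)
    v = [float(i + 1) for i in range(d)]      # arbitrary non-zero start vector
    norm = math.sqrt(sum(x * x for x in v))
    v = [x / norm for x in v]
    for _ in range(iters):
        w = mat_vec(C, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:                       # C maps v to zero; stop iterating
            break
        v = [x / norm for x in w]
    eigval = sum(a * b for a, b in zip(v, mat_vec(C, v)))  # Rayleigh quotient
    return eigval, v


def pca_2d(X):
    """Project rows of X onto the two directions of largest variance."""
    Xc = center(X)
    C = covariance(Xc)
    variances, components = [], []
    for _ in range(2):
        lam, v = top_eigenpair(C)
        variances.append(lam)
        components.append(v)
        # Deflation: remove the direction just found before the next search.
        C = [[C[i][j] - lam * v[i] * v[j] for j in range(len(v))]
             for i in range(len(v))]
    projected = [[sum(a * b for a, b in zip(row, comp)) for comp in components]
                 for row in Xc]
    return projected, components, variances


# Invented data: five features, with most variance in feature 0, then feature 1.
X = [[10.0 * (1 if i & 1 else -1),
      2.0 * (1 if i & 2 else -1),
      0.1 * (1 if i & 4 else -1),
      0.0,
      0.0] for i in range(8)]

projected, components, variances = pca_2d(X)
print(projected[0], variances)
```

Each utterance is then represented by the two coordinates in `projected`, which is the form a two-dimensional classifier would consume.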

Abstract

Thirty-two emotional speech databases are reviewed. Each database consists of a corpus of human speech pronounced under different emotional conditions. A basic description of each database and its applications is provided. The conclusions of this study are as follows. First, automated emotion recognition cannot achieve a correct classification rate that exceeds 50% for the four basic emotions, i.e., twice the rate of random selection. Second, natural emotions cannot be classified as easily as simulated (i.e., acted) ones. Third, the most common emotions searched for, in decreasing frequency of appearance, are anger, sadness, happiness, fear, disgust, joy, surprise, and boredom.